When it comes to memory bandwidth, latency is a second factor to consider. Memory buses were originally general-purpose buses such as the VMEbus and S-100 bus, but contemporary memory buses are designed to connect directly to the VRAM chips to reduce latency. This is the case for GDDR5 and GDDR6, which are among the newer GPU memory standards.
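To see why latency matters alongside bandwidth, here is a minimal first-order sketch, assuming every transfer costs a fixed access latency plus its size divided by the peak bandwidth. The function names, the 500 ns latency, and the 288 GB/s bandwidth are hypothetical illustrative values, and real GPUs keep many requests in flight to hide latency, which this simple model ignores.

```python
def transfer_time_s(num_bytes: float, latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """First-order model: a fixed access latency plus the time to stream the data."""
    return latency_s + num_bytes / bandwidth_bytes_per_s


def effective_bandwidth(num_bytes: float, latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Bytes actually delivered per second once the fixed latency is included."""
    return num_bytes / transfer_time_s(num_bytes, latency_s, bandwidth_bytes_per_s)


if __name__ == "__main__":
    LATENCY_S = 500e-9  # hypothetical access latency (500 ns)
    PEAK_BW = 288e9     # hypothetical peak bandwidth (288 GB/s)
    for size in (64, 4096, 1 << 20, 1 << 30):
        bw = effective_bandwidth(size, LATENCY_S, PEAK_BW)
        print(f"{size:>12} bytes -> {bw / 1e9:7.2f} GB/s effective")
```

Under this model, small transfers are dominated by the fixed latency and see only a tiny fraction of peak bandwidth, while large transfers approach the peak, which is why both numbers matter.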

# AMD Link GPU memory series

The POD125 standard, for example, which essentially describes the signaling used to communicate with GDDR6 VRAM, is used by the NVIDIA Ampere-series A4000, A5000, and A6000 graphics cards available to Paperspace users.


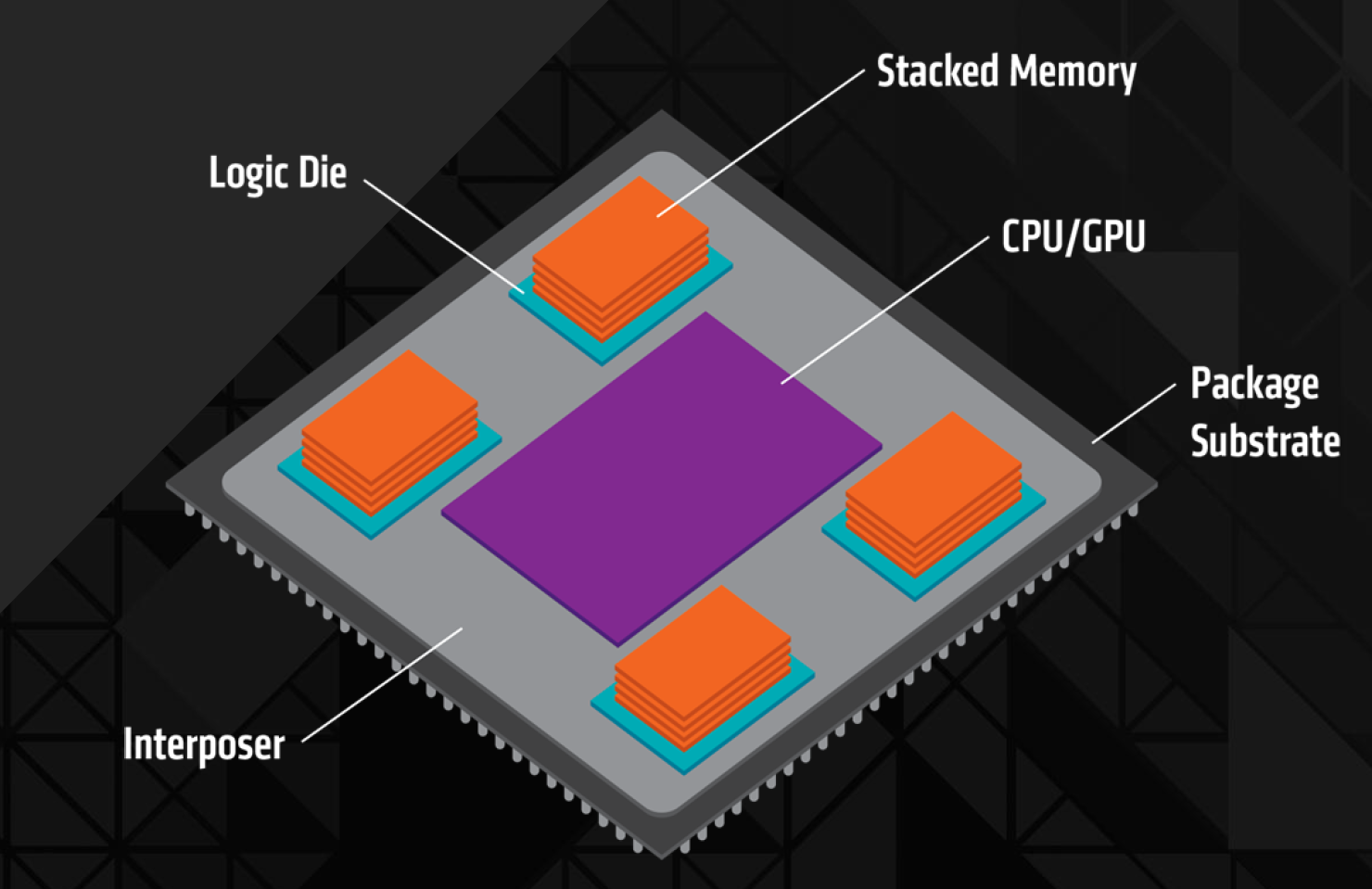

The graphics processing unit (GPU) on a graphics card is somewhat analogous to the CPU on a computer's motherboard. A GPU, however, is designed to perform the complex mathematical and geometric calculations required for graphics rendering and machine learning applications. On a graphics card, the GPU is connected to the memory unit (VRAM, short for Video Random Access Memory) via a bus called the memory interface.

Nvidia GTX 780 PCB Layout, Source

There are numerous memory interfaces throughout a computer system. For a GPU, the memory interface refers to the physical bit-width of the memory bus. Data is sent to and from the on-card memory every clock cycle, billions of times per second. The width of this interface, meaning the number of bits that can travel along the bus each clock cycle, is usually quoted as "384-bit" or similar: a 384-bit memory interface transfers 384 bits of data per clock cycle. The memory interface is therefore an important part of the memory bandwidth calculation when establishing a GPU's maximum memory throughput. As a result, NVIDIA and AMD tend to employ standardized serial point-to-point buses in their graphics cards.
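The bandwidth calculation above can be sketched as bus width times per-pin data rate, divided by 8 to convert bits to bytes. This is a minimal sketch assuming the per-pin rate is the *effective* data rate: GDDR memories move more than one bit per pin per clock, so multiplying "bits per clock cycle" by the raw clock frequency would understate it. The function name and the example widths and rates are hypothetical, chosen for illustration rather than taken from any specific card's datasheet.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps_per_pin: float) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal gigabytes).

    bus_width_bits: width of the memory interface, e.g. 384 for a "384-bit" bus.
    data_rate_gbps_per_pin: effective data rate of each data pin in Gbit/s; this
        already includes the multiple transfers per clock that GDDR memories perform.
    """
    # Every pin moves data_rate_gbps_per_pin gigabits per second; divide by 8 for bytes.
    return bus_width_bits * data_rate_gbps_per_pin / 8


if __name__ == "__main__":
    # Illustrative configurations only; the per-pin rates are assumptions, not card specs.
    for width, rate in ((256, 6.0), (384, 6.0), (384, 16.0)):
        print(f"{width}-bit bus @ {rate} Gbps/pin -> {peak_bandwidth_gb_s(width, rate):.1f} GB/s")
```

Holding the data rate fixed, widening the bus from 256 to 384 bits raises peak bandwidth by 50%, which is why the interface width belongs in the calculation.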

Understanding the memory needs of machine learning is an important part of developing a model, yet it is easy to overlook.

# The basic GPU anatomy

A graphics card, like a motherboard, is a printed circuit board that holds a processor, memory, and a power management unit. It also has a BIOS chip, which retains the card's settings and performs startup diagnostics on the memory, input, and output.

This blog breaks down one of the most overlooked GPU characteristics: memory bandwidth. We will look at what GPU memory bandwidth is and why an ML practitioner should treat it as one of the key qualities to look for in a machine learning platform.
